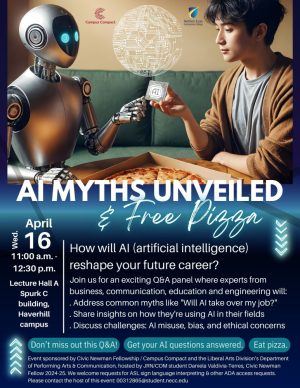

The first AI Panel Event at NECC was held on April 16. It gathered staff, students and faculty in the Lecture Hall, all of them curious to ask questions about artificial intelligence (AI). Five panelists from different industries, all of whom use AI at their workplaces, shared their experiences and insights. The audience had the opportunity to engage in the conversation or share concerns about AI by simply raising a hand or through Padlet, an app that lets individuals post comments and questions online, which were then shown on the screen.

The panelists were:

Angela Abad, an accomplished Digital Strategist, Creative Director, and Producer at El Mundo Boston, recognized for her leadership, creativity, and innovation in the media industry. Her work continues to shape the digital landscape, and with a deep understanding of digital tools and emerging tech, she knows how to craft stories that captivate and connect.

David Kim, a software developer at MIT App Inventor. He is interested in the question, “What do we need to create so that kids can learn technology with ease?” David is working on Conversational AI and building no-code platforms. Beyond development, David enjoys interacting with students and guiding them to fulfill their goals at App Inventor.

Samantha Romano, a Senior Solutions Consultant at ServiceNow, where she works at the intersection of technology and strategy to help organizations leverage AI-powered solutions for enhanced business outcomes. Samantha’s combination of technical expertise and leadership skills positions her as a valuable voice in discussions about the future of AI in the workplace.

Peter Shea, Assistant Dean for AI Integration at Middlesex Community College in Massachusetts and a founding faculty member of the AAC&U Institute on AI, Pedagogy, and the Curriculum. In 2020, he founded a LinkedIn group focused on AI and education. He also co-edited Transforming Digital Learning and Assessment: A Guide to Available and Emerging Practices and Building Institutional Consensus (Routledge, 2021).

Don Vescio, Professor of English at Worcester State University and co-chair of the Massachusetts Artificial Intelligence Council for Higher Education (MACH), a collaboration among state universities and community colleges. With a background in English and the humanities, Don brings a distinctive perspective to emerging technologies, helping institutions navigate opportunities and challenges in a rapidly evolving digital environment.

And Rubi! A robot that looked like a tiny dog, brought by David Kim, drew smiles and wows from the audience. This voice-to-action robot performed several motions, such as waving and dancing, after receiving a voice prompt through App Inventor, an easy-to-use platform for creating apps by dragging blocks. Kim explained, “In our lab (App Inventor Lab), we want to empower everyone to know they can create their own app, like any app you use on your phone right now. Also, anyone can create and program a robot.” Kim and his team want to inspire and empower anyone, even kids, to access technology and explore how it can improve their lives.

Devan Walton, assistant professor of Computer Information Sciences, asked the first round of questions. He asked David Kim about the potential downsides of AI for the future of the software industry.

Kim said AI lowers the barriers to creating software. Converting scripts from one language to another, for instance from Java to Script, used to be a hassle. Now Kim asks an LLM (a large language model such as ChatGPT) to do it and gets a result within seconds. It may not be perfect, but it provides a skeleton to build on.

“It really shortens the time, it’s very useful, but my concern is that these LLMs are learning from patterns of our data; they do not have their own consciousness. The problem is that software engineering is not only coding; coding is a methodology. The real thing is designing systems, it’s creating something, it’s not the syntax. It’s understanding the system as a whole and how the group works together, and these LLM models do not have that level of understanding. That’s what is really important for being a good software engineer.”

The question for Kim is whether the next generation of users, who are growing accustomed to LLMs, will come to understand coding as a system through their use of these tools. Only time will tell.

On the impact of AI in the workplace, Samantha Romano was asked which industries will be more resistant to adopting AI in the coming years. Romano explained that with every evolution there is room for new jobs and new skills to develop.

“AI is not taking the job away; it is taking the job task away.” She remarked that your job will be vulnerable if you don’t apply AI in your workplace.

She added, “If you are building the skills necessary for your job using AI, you don’t need to worry that your job will be obsolete.” This applies to any field.

Another question was about how we balance the use of AI in education, and Don Vescio took the lead. He said that when he started teaching, there was no internet, so he has seen the adoption of the internet and, over the last few years, the adoption of AI.

He reminded us that we have had more than three decades to adapt to the internet, while with AI the curve is rising too fast to keep up with.

Vescio said, “The first thing you need to know is your life is going to change and you need to embrace the change. AI in theory will allow us to automate a lot of things, to get additional insights in data or in operations that we normally would have to make educated guesses or pay high consulting to do so.”

Vescio cited a 2024 study that “found out that over 70% of global corporations now rely on AI for some of their operations. You need to know it. You need to accept it. You need to accept that your job, your workflow will change rapidly and frequently. You need to have flexibility and agility.”

In other words, you had better learn how to use AI and how to integrate it into your job.

Importantly, Vescio talked about the risks of corporations using AI and called on us to make sure we design ethical frameworks. For instance, Amazon discovered through an internal audit that its tracking system had a significant gender bias in its analysis of resumes for job positions. Similarly, health care algorithms are dealing with issues of racial bias when processing data.

On the subject of AI bias, Angela Abad explained that at her news media company it is an excruciating daily problem. “The biggest problem we have with AI is in the creation of articles. We had people questioning if there is actually a human writing what they are reading,” said Abad. She explained that they use AI as a fact-checking tool but have found that the information is neither up to date nor accurate. “There is the importance of having a human eye, a human input into every process … we still need people making sure everything is accurate,” stated Abad.

In contrast, Peter Shea was asked about unusual applications of AI in teaching. Shea said he was impressed by how some educators are integrating AI. For instance, he mentioned educators who created history video content using AI tools, mixing pop culture from different periods or eras to inspire conversations in the classroom. This can be thought-provoking and engage students with interesting questions. Another example was using AI to help students overcome obstacles. Using an LLM, Shea looked for metaphors to explain the risk of diabetes to Vietnamese people. With the aid of a text-to-voice platform, he recorded his voice in English, and the program delivered the full text in a “pretend” version of his voice, speaking fluent Vietnamese about the risk of diabetes. “This is a good example of how you can overcome obstacles. Particularly for students for whom English is not their first language, and they have the cultural challenge,” said Shea.

An hour and a half was not enough to answer all of the audience’s questions. This shows that we, the students, as well as faculty and staff, are craving more of these interesting conversations.

Mary Jo Shafer, journalism professor and NECC Observer adviser, said she is skeptical about AI. However, curious about it, and there mostly in support of this writer, she said, “It was an interesting conversation, it was a wonderful panel. I enjoyed hearing from the panelists, I appreciated their thoughts on how AI will affect students in the workplace. I’m very pleased that panelists did not shy away from discussing the real ethical issues related to AI. Also, they emphasized the importance of digital literacy, well, also, letting students know some of the ways students can use it.”

I organized this event as part of my Newman Civic Fellowship project, which was sponsored by the Campus Compact organization. I pitched the idea and applied for a mini-grant to make it happen.

My curiosity about AI, and the uncertainty my peers have shown about the future of their careers in a workplace shaped by AI, ignited in me the desire to do something about it. I would like to thank everyone who wholeheartedly helped me make this project a reality, especially Professor Kim Lyng and AI Task Force leader Susan Tashjian. Most importantly, my wish is that this event won’t be the only one. Instead, I want to see it as the icebreaker and the first of many conversations about AI: its pros and cons, its limitations and possibilities, and how we can integrate it quickly and efficiently.

As Peter Shea said, “Community college students have a better opportunity to integrate AI than four-year institutions because at community college, we adapt fast, we do a lot with little resources or without them.”

You can watch the full event here. Thanks to HC Media for the full coverage.